CU Denver ORS CRC -- TABY -- A Teaching Assistant Bot for You -- towards creating a pedagogical toolkit for remote teaching by leveraging Machine Learning

Background: Educational institutions around the world have moved to remote instruction due to the COVID-19 pandemic. Course instructors now teach live on a video conferencing platform, where students sign in and interact with the instructor through the audio and video capture devices built into most computers. This ‘classroom-in-a-home/couch’ transformation of the learning platform raises a variety of concerns for both instructors and students about the quality of learning. One obvious challenge, which affects the overall learning experience, is that instructors are not able to ‘read the classroom’ as they can during in-classroom lectures: they cannot interface with students’ facial expressions, body language, and other overt forms of communication that help them characterize the classroom dynamics. These dynamics are a major driving force for successful teaching, because they help set up a positive classroom atmosphere where students feel comfortable learning and communicating with other students and with the instructor. More specifically, in a larger class, having access only to postage-stamp-sized facial impressions (whenever available) has proven insufficient to carefully monitor the progress of the class session or the students’ level of engagement/disengagement.

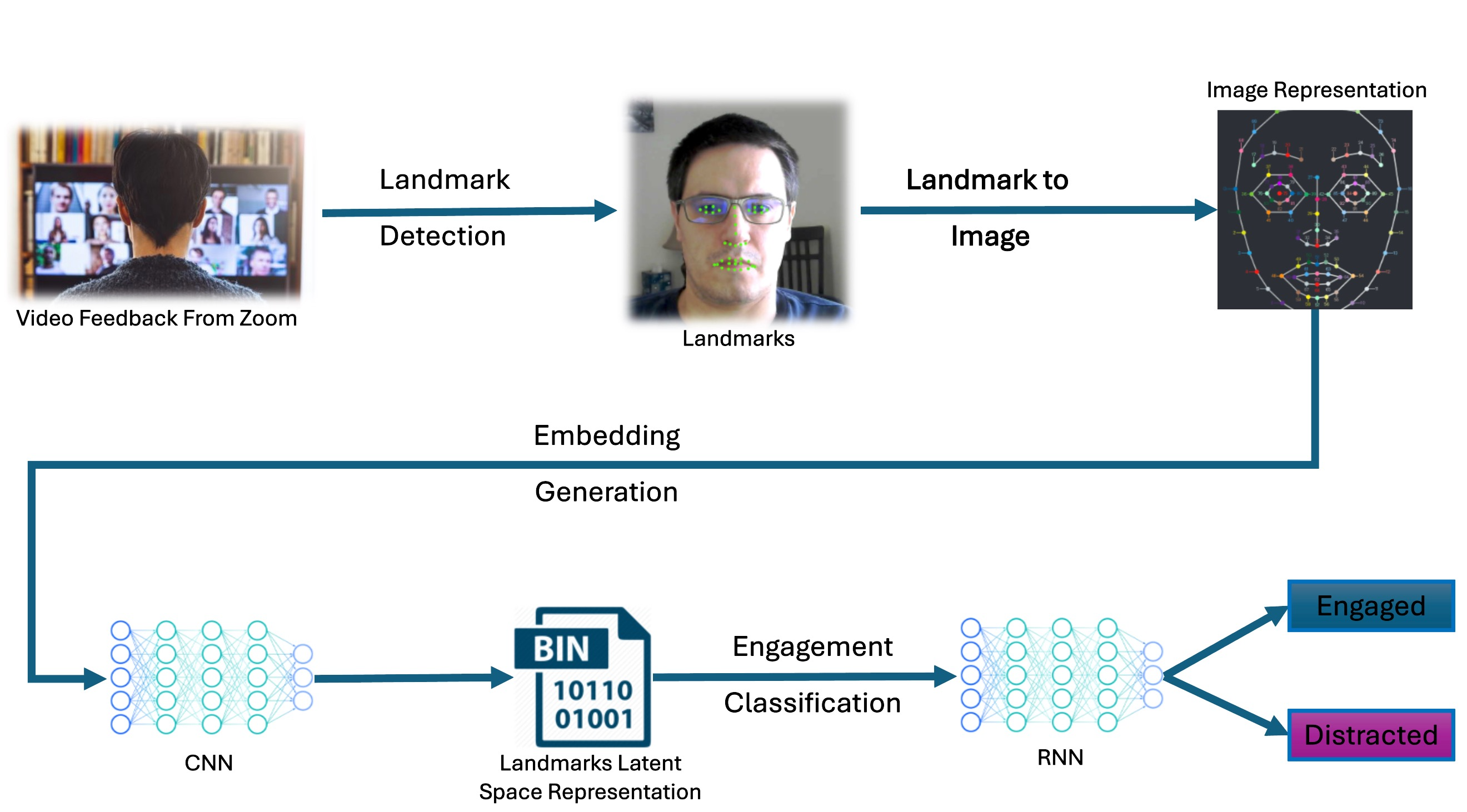

Proposed Collaborative Research and its impacts: In this project, we propose to investigate and develop an artificial intelligence (AI) platform on top of commercially available video conferencing software to help instructors “read” their students’ expressions. The proposed platform will provide instantaneous feedback to instructors during remote instruction. We plan to accomplish this by leveraging the incoming audio and/or video streams of attendees who agree to make their stream(s) available. Facial landmark detection, followed by a generalizable recurrent neural network-based event categorization method that we will design and develop, will measure the attendees’ level of attention. We will use the existing application programming interfaces (APIs) of commercial video conferencing tools to obtain the multimedia input streams that attendees allow during a class session. Each stream will be fed to the proposed machine learning module, which runs in parallel and provides summary statistics to the main module. The main module is connected to the instructor’s dashboard application, which is overlaid on top of the existing video conferencing tool. The dashboard will be a single pictorial visual guide that provides an anonymized real-time summary of students’ engagement/disengagement with the material during a lecture. This pedagogical toolkit could also help identify patterns and characteristics among the students to inform decisions related to how fast to cover a topic, students’ engagement/disengagement, and/or other instructional tool development. Knowing the real-time summary statistics on the dashboard overlay, the instructor could change the pace of the lecture, revisit harder topics, encourage group discussions, or tell examples/stories as ice-breakers whenever appropriate.
Then, using the summary statistics about the classroom dynamics, we propose a recommender system for the instructor, powered by deep neural network algorithms, that will suggest an intervention on the proposed dashboard so the instructor can adapt during a live lecture (e.g., “It’s time for a break” or “Time for a pop quiz”).
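
To make the data flow described above more concrete, the following is a minimal Python sketch, not the project's implementation. It assumes the machine learning module has already produced one attention score in [0, 1] per consenting attendee; the names `ClassSummary`, `summarize_attention`, and `suggest_intervention`, as well as all threshold values, are hypothetical. A simple threshold rule stands in for the proposed deep neural network recommender, purely to show how anonymized summary statistics could map to a dashboard suggestion.

```python
from dataclasses import dataclass
from statistics import mean
from typing import Optional, Sequence


@dataclass(frozen=True)
class ClassSummary:
    """Anonymized class-level statistics; no per-student identity is kept."""
    n_attendees: int
    mean_attention: float
    fraction_disengaged: float


def summarize_attention(
    scores: Sequence[float], disengaged_below: float = 0.4
) -> Optional[ClassSummary]:
    """Collapse per-attendee attention scores (assumed to lie in [0, 1])
    into a single summary. Returns None when no attendee shares a stream."""
    if not scores:
        return None
    n_low = sum(1 for s in scores if s < disengaged_below)
    return ClassSummary(
        n_attendees=len(scores),
        mean_attention=mean(scores),
        fraction_disengaged=n_low / len(scores),
    )


def suggest_intervention(summary: Optional[ClassSummary]) -> Optional[str]:
    """Hypothetical rule-based stand-in for the proposed recommender.
    The thresholds below are illustrative assumptions, not tuned values."""
    if summary is None:
        return None
    if summary.fraction_disengaged >= 0.5:
        return "It's time for a break"
    if summary.mean_attention < 0.6:
        return "Time for a pop quiz"
    return None  # class appears engaged; no suggestion shown


if __name__ == "__main__":
    snapshot = [0.9, 0.8, 0.3, 0.2]  # made-up scores for one time window
    summary = summarize_attention(snapshot)
    print(summary)
    print(suggest_intervention(summary))
```

Only the aggregate `ClassSummary` would reach the dashboard in this sketch, which mirrors the anonymized summary the proposal describes; a learned recommender would replace `suggest_intervention` while keeping the same input.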

Data management plan: Submission of IRB/COMIRB application (Protocol #: 20-2803) for pertinent data collection.

Sponsor: Creative Research Collaborative Grants of the Office of Research Services (ORS) at the University of Colorado Denver

Collaborators

- Ashis Kumer Biswas (PI)

- Geeta Verma (Professor at School of Education and Human Development, CU Denver)

- Elizabeth Coughanour (Post-doc, SEHD, CU Denver)

- Javier Pastorino (PhD Student, CSE, CU Denver)

- Biniyam Yohannes (Undergraduate Student, CSE, CU Denver)

Presentations and Publications of Research Products

- April 2021: Talk at the CRC Talk Series at CU Denver (pptx)
